Books

Class 2. Classification

In computational science, curve fitting falls into the category of non-linear approximation. Unlike what we discussed in Class 1. Regression, the functional shape now takes one of the following two forms. To keep the illustration simple, let’s start with a simpler format, the linear one. For a 3rd-order function w.r.t. $x$, you need 4…

Class 1. Regression problems

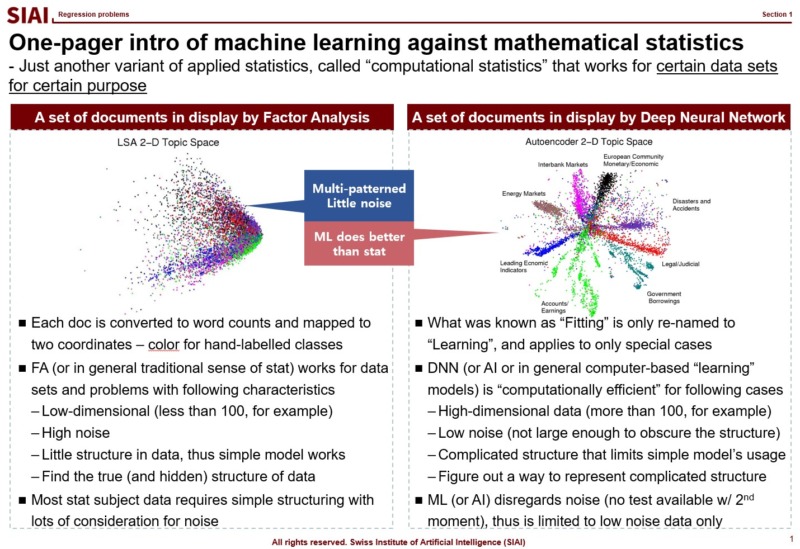

Many people think machine learning is some sort of magic wand. If a scholar claims that a task is mathematically impossible, people start asking whether machine learning can offer an alternative. The truth is, as discussed in all the prerequisite courses, machine learning is nothing more than a computer version of statistics, which is a discipline…

Class 8. Summary of Deep Learning

The model examination is available from the link above. The last class works through the previous year’s (or a similar) exam while covering the key components of the earlier classes.

Class 7. Deep Generative Models

Generative models are simply models that are updated repeatedly. Unlike the discriminative models that we learned in all previous lectures, such as linear/non-linear regression, SVM, tree models, and neural networks, generative models are closely related to Bayesian-type updates. RBM (Restricted Boltzmann Machine) is one of the example models that we learned in this class. RNN, depending…

Class 6. Recurrent Neural Network

A Recurrent Neural Network (RNN) is a neural network model that uses repeated processes with certain conditions. The conditions are often termed ‘memory’, and depending on the validity and reliance of the memory, there can be infinitely many variations of RNN. However, whatever underlying data structure it can fit, the RNN model is simply…
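To make the idea of a repeated process that carries ‘memory’ concrete, here is a minimal sketch of a single-unit recurrence. It assumes the common textbook form $h_t = \tanh(w_x x_t + w_h h_{t-1} + b)$; the function name `rnn_forward` and the weight values are hypothetical and chosen only for illustration, not taken from the class material.

```python
import math

def rnn_forward(inputs, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Run a one-unit recurrent cell over a sequence of scalar inputs.

    The hidden state h plays the role of the 'memory': each step mixes
    the current input with the state left over from the previous step.
    All weights are illustrative assumptions.
    """
    h = h0
    states = []
    for x in inputs:
        # Same update rule applied at every time step (the "repeated process").
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states

# One non-zero input followed by zeros: later states stay non-zero
# only because the hidden state carries the first input forward.
states = rnn_forward([1.0, 0.0, 0.0])
```

With `w_h` set to zero the later states would drop to zero immediately, which is one way to see how the weight on the previous state controls how much the model relies on its memory.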

Class 5. Image recognition

As shown by the autoencoder versions of RBM, a well-designed neural network can generally perform better than PCA when it comes to finding hidden factors. This is why image recognition relies on neural networks. Images are first converted to a matrix whose entries are RGB color values, (R, G, B). The matrix is for…
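The conversion of an image into a matrix of (R, G, B) entries can be sketched as follows. This is an illustration only: the 2x2 `image` and the helper `split_channels` are hypothetical, and a real pipeline would normally read pixels from a file with an imaging library.

```python
# A hypothetical 2x2 image: each entry is an (R, G, B) tuple with values 0-255.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]

def split_channels(img):
    """Return three matrices (R, G, B), one per color channel."""
    return tuple(
        [[pixel[c] for pixel in row] for row in img]
        for c in range(3)
    )

red, green, blue = split_channels(image)
```

Each channel matrix has the same height and width as the image, so downstream steps can treat the picture as ordinary numeric data.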

Class 4. Boltzmann machine

Constructing an Autoencoder model looks like an art, if not computationally heavy work. Many untrained data engineers rely on coding libraries and a graphics card (one that supports ‘AI’ computation), hoping that the computer will find an ideal neural network. As discussed in the previous section, the process is highly exposed to overfitting, local maxima,…

Class 3. MCMC and Bayesian extensions

Bayesian estimation tactics can be used to replace the arbitrary construction of a deep learning model’s hidden layers. In one way, this replicates Factor Analysis in every layer’s construction, except that a change in one layer’s values now affects the other layers. This process goes from one layer to all layers. What makes this job more demanding…

Class 2. Autoencoder

Feed forward and back propagation have a significant advantage in terms of calculation speed and error correction, but that does not mean we can eliminate the errors. In fact, the error grows if the fed data leads the model off the convergence path. The more layers there are, the more computational resources are required,…

Class 1. Introduction to deep learning

As was discussed in [COM502] Machine Learning, the introduction to deep learning begins with the history of computational methods, going back as early as 1943, when the concept of the neural network first emerged. From the departure of regression to graph models, the major building blocks of neural networks, such as the perceptron, the XOR problem, multi-layering, SVM, and pretraining, are briefly…